To refresh my Neutron knowledge and catch up on the networking features of the last 4-5 years, I chose GNS3 as the lab environment and Packstack as a quick way to deploy. I need to recap provider/tenant network usage, L2 population, DVR, floating IPs, etc. with OVS, and moreover with OVN. Later on, I will try the new BGP features.

My path:

- Enabled KVM acceleration on GNS3.

- Used CentOS Stream 9: CentOS-Stream-GenericCloud-9-20230116.0.x86_64.qcow2

- For the VM's advanced settings: -cpu host -accel kvm.

- For VM setup:

- Packstack requires the old network service, but on CentOS Stream 9 it is no longer listed in the repos. So before the network scripts installation step, I installed OpenStack on all nodes, even though it is not required on compute nodes. After that I could change the network service and install the network scripts successfully.

- SELinux also needs to be disabled. The option in the Packstack document only changes its value at run time. (I also tried installing with SELinux enabled. It worked, but the installation took longer.)

- nano /etc/selinux/config --> SELINUX=disabled

- grubby --update-kernel ALL --args selinux=0

- Reboot the machine.

- Then installed Packstack on one of the controller nodes.

- After changing the hostnames, I also edited /etc/hosts to map 127.0.0.1 to the new hostname. I never added the management IP addresses to /etc/hosts, although some blogs recommend it.

- Changed the interface settings to static IP address assignments. If you are using a single interface that is also used for the internet connection (NAT cloud on GNS3), Packstack moves this interface's configuration to the br-ex interface during installation. At this step the machine occasionally loses its DNS configuration, so I edited /etc/resolv.conf to use a static DNS server like 1.1.1.1.

- Also allow root login over sshd.

- Reboot the machines one last time and check internet access.

- Run Packstack.

- Packstack generates an answer file that contains the configuration options used for deploying OpenStack on the hosts. Be careful when running Packstack multiple times: you should reuse the answer file from the first deployment. Generally I used the command line for the first installation and later changed the answer file generated at that time, for example to add an additional compute node.
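The node preparation steps above can be sketched as a few shell helpers. This is only a sketch, not the exact commands I typed: the function names and the optional file argument are made up here so that each step can be tried on a copy of the file first, and controller1 is a placeholder hostname.

```shell
#!/usr/bin/env bash
# Node preparation helpers (sketch). Each function takes the target file as an
# optional last argument, so it can be tried on a copy before touching /etc.

# Persistently disable SELinux in the config file (takes effect after reboot).
selinux_disable() {
  local cfg="${1:-/etc/selinux/config}"
  sed -i 's/^SELINUX=.*/SELINUX=disabled/' "$cfg"
}

# Map 127.0.0.1 to the new hostname, unless such an entry already exists.
hosts_add_local() {
  local name="$1" hosts="${2:-/etc/hosts}"
  if ! awk -v n="$name" '
         $1 == "127.0.0.1" { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
         END { exit !found }' "$hosts"; then
    echo "127.0.0.1 ${name}" >> "$hosts"
  fi
}

# Write a static resolver entry, for the case where DNS is lost after the
# interface configuration moves to br-ex.
dns_set_static() {
  local server="${1:-1.1.1.1}" resolv="${2:-/etc/resolv.conf}"
  echo "nameserver ${server}" > "$resolv"
}

# Typical use on a node (as root), followed by a reboot:
#   selinux_disable && grubby --update-kernel ALL --args selinux=0
#   hosts_add_local controller1      # placeholder hostname
#   dns_set_static 1.1.1.1
```

Nothing runs when the file is sourced; the functions only touch the real files when called without a path argument.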

Multi node OVS installation:

With OVS:

    packstack --allinone --provision-demo=n \
        --os-cinder-install=n --os-ceilometer-install=n \
        --os-trove-install=n --os-ironic-install=n \
        --os-swift-install=n --os-aodh-install=n \
        --os-neutron-l2-agent=openvswitch \
        --os-neutron-ml2-mechanism-drivers=openvswitch \
        --os-neutron-ml2-tenant-network-types=vxlan,vlan \
        --os-neutron-ml2-type-drivers=vxlan,flat,vlan \
        --os-neutron-ovs-bridge-mappings=extnet:br-ex,physnet1:br-vlan \
        --os-neutron-ovs-bridge-interfaces=br-ex:eth2,br-vlan:eth3 \
        --os-neutron-ml2-vlan-ranges=physnet1:101:103 \
        --os-neutron-ovs-bridges-compute=br-ex,br-vlan \
        --os-network-hosts=192.168.122.49 \
        --os-compute-hosts=192.168.122.4,192.168.122.219 \
        --os-neutron-ovs-tunnel-if=eth1
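Because later runs should reuse the answer file from the first deployment, adding a compute node afterwards comes down to editing one key in that file and rerunning Packstack against it. A minimal sketch, assuming the answer file lists compute nodes under the CONFIG_COMPUTE_HOSTS key; the function name and the file path in the usage comment are placeholders of mine.

```shell
#!/usr/bin/env bash
# Append a compute node IP to CONFIG_COMPUTE_HOSTS in a Packstack answer file.
# Does nothing if the IP is already listed (or if the key has an empty value).
add_compute_host() {
  local ip="$1" answers="$2"
  # Exact match against the comma-separated list, so 192.168.122.4 does not
  # accidentally match 192.168.122.41.
  if awk -F= -v ip="$ip" '
       $1 == "CONFIG_COMPUTE_HOSTS" {
         n = split($2, hosts, ",")
         for (i = 1; i <= n; i++) if (hosts[i] == ip) found = 1
       }
       END { exit !found }' "$answers"; then
    return 0
  fi
  sed -i "s/^CONFIG_COMPUTE_HOSTS=..*/&,${ip}/" "$answers"
}

# Usage (the path is a placeholder for the file generated by the first run):
#   add_compute_host 192.168.122.219 "$HOME/packstack-answers.txt"
#   packstack --answer-file="$HOME/packstack-answers.txt"
```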

With OVN:

    packstack --allinone --provision-demo=n \
        --os-cinder-install=n \
        --os-ceilometer-install=n \
        --os-trove-install=n \
        --os-ironic-install=n \
        --os-swift-install=n \
        --os-aodh-install=n \
        --os-neutron-ml2-type-drivers=vxlan,flat,vlan,geneve \
        --os-neutron-ml2-tenant-network-types=vxlan,vlan,geneve \
        --os-neutron-ml2-mechanism-drivers=ovn \
        --os-neutron-ovn-bridge-mappings=extnet:br-ex,physnet1:br-vlan \
        --os-neutron-ovn-bridge-interfaces=br-ex:eth2,br-vlan:eth3 \
        --os-neutron-ml2-vlan-ranges=physnet1:101:103 \
        --os-neutron-ovn-bridges-compute=br-ex,br-vlan \
        --os-neutron-ovn-tunnel-if=eth1 \
        --os-neutron-ovn-tunnel-subnets=192.168.10.0/24 \
        --os-controller-host=192.168.122.192 \
        --os-compute-hosts=192.168.122.106,192.168.10.154

Enabling DVR (OVN):

There is no such option as --distributed for OVN routers. Just enable DVR.

ml2.ini -->

    [ml2]
    type_drivers=vxlan,flat,vlan,geneve
    tenant_network_types=geneve
    mechanism_drivers=ovn
    path_mtu=0
    extension_drivers=port_security,qos

    [securitygroup]
    enable_security_group=True

    [ml2_type_vxlan]
    vxlan_group=224.0.0.1
    vni_ranges=10:100

    [ml2_type_flat]
    flat_networks=*

    [ml2_type_vlan]
    network_vlan_ranges=physnet1:101:103

    [ml2_type_geneve]
    max_header_size=38
    vni_ranges=10:100

    [ovn]
    ovn_nb_connection=tcp:192.168.122.192:6641
    ovn_sb_connection=tcp:192.168.122.192:6642
    ovn_metadata_enabled=True
    enable_distributed_floating_ip=True

Restart the neutron service with systemctl restart neutron-server.service

Accessing the Horizon dashboard from outside the lab environment

We need to use NAT on the GNS3 host (replace OUTSIDE_PORT with the port to expose, e.g. 5602):

    sudo iptables -t nat -A PREROUTING -p tcp --dport OUTSIDE_PORT \
        -j DNAT --to-destination controller_ip:80
    sudo iptables -I LIBVIRT_FWI -p tcp --dport 80 -m conntrack --ctstate NEW,ESTABLISHED -j ACCEPT

On the controller host, we also need to add the GNS3 IP address to the httpd daemon's Horizon configuration file. Edit /etc/httpd/conf.d/15-horizon_vhost.conf to include the GNS3 outside interface address:

    ServerAlias GNS3_OUT_SIDE_IP
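The two NAT rules can be wrapped in a small helper that takes the outside port and the controller IP. This is a sketch: the run and expose_horizon names are mine, and by default it only prints the commands so the values can be checked first.

```shell
#!/usr/bin/env bash
# Print (default) or apply the two iptables rules that expose Horizon.
# Set DRY_RUN=0 and run as root on the GNS3 host to actually apply them.
run() {
  if [ "${DRY_RUN:-1}" = 1 ]; then echo "+ $*"; else "$@"; fi
}

expose_horizon() {
  local outside_port="$1" controller_ip="$2"
  # Forward the outside port on the GNS3 host to Horizon on the controller.
  run iptables -t nat -A PREROUTING -p tcp --dport "$outside_port" \
      -j DNAT --to-destination "${controller_ip}:80"
  # Let the forwarded connections through the libvirt forward chain.
  run iptables -I LIBVIRT_FWI -p tcp --dport 80 \
      -m conntrack --ctstate NEW,ESTABLISHED -j ACCEPT
}

expose_horizon 5602 192.168.122.96
```

With the default dry run this prints both iptables commands prefixed with "+ " instead of changing the firewall.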

Quick Notes about networking (OVS):

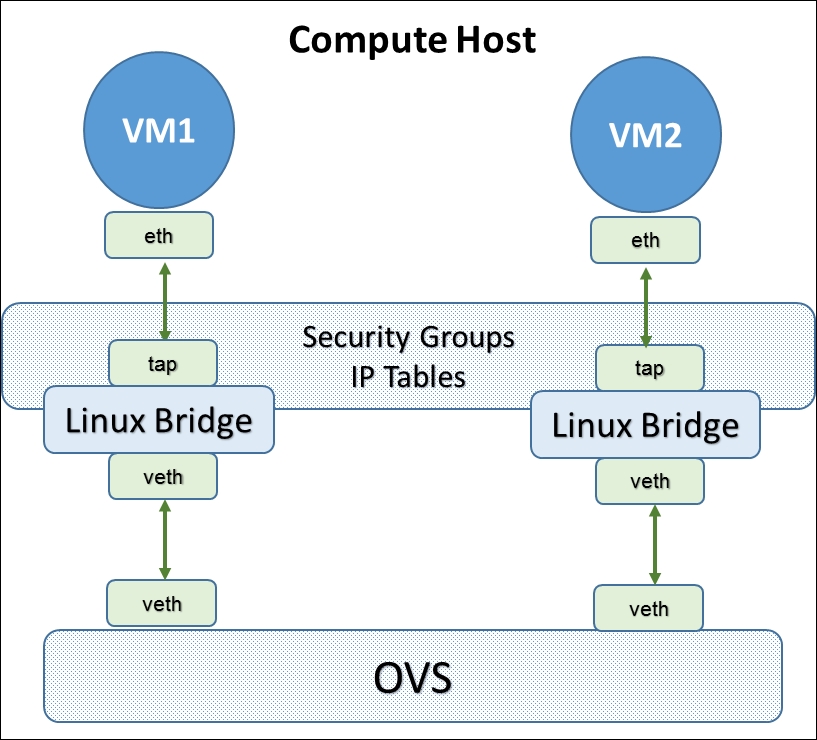

- Each VM is connected to a Linux bridge where the security rules are deployed. Why a separate Linux bridge for each VM? Layer 2 isolation and Layer 2 rule deployment!

- Then each VM is connected to an OVS bridge (ignore the Linux bridges, as they act as an inline device between the VM and this OVS bridge). This bridge is called the integration bridge, br-int. The integration bridge is mainly responsible for local connectivity: local forwarding within the same tenant (L2 connectivity) and tenant isolation using VLAN, VXLAN, etc., commonly VLAN. Each tenant network gets a locally significant VLAN ID.

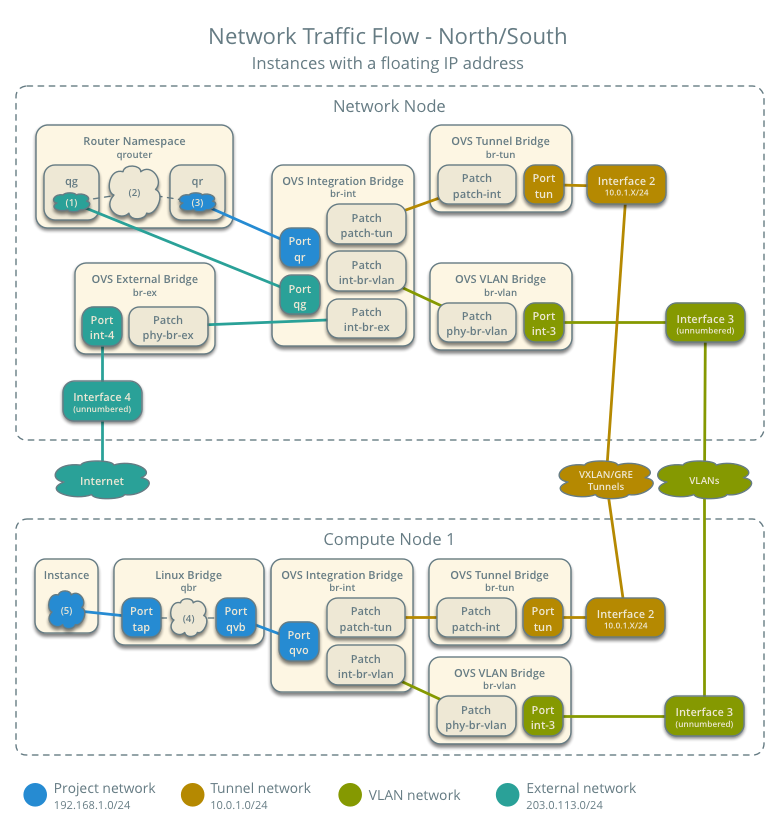

- To connect tenant networks that are spread over several compute hosts, overlay tunnels like VXLAN, Geneve, etc. are used. Those overlay tunnels are not connected directly to br-int! A separate bridge, br-tun, is used for connecting the tunnels. A separate logical interface is created for each of the other nodes and connected to br-tun, and a patch interface links br-int and br-tun. With those connections, a layer 2 domain for each tenant network is created across the OpenStack nodes.
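The bridge layout described above can be inspected on a node with a handful of standard OVS and iproute2 commands. A sketch, assuming the default bridge names br-int and br-tun; the port names in the comments are what I typically see and may differ per setup. The run helper is mine and only prints the commands unless DRY_RUN=0.

```shell
#!/usr/bin/env bash
# Print (default) or run the inspection commands. Set DRY_RUN=0 on a node
# where Open vSwitch is installed to see the real output.
run() {
  if [ "${DRY_RUN:-1}" = 1 ]; then echo "+ $*"; else "$@"; fi
}

inspect_ovs() {
  run ovs-vsctl list-br              # bridges, e.g. br-int, br-tun, br-ex
  run ovs-vsctl list-ports br-int    # VM-facing ports plus the patch port to br-tun
  run ovs-vsctl list-ports br-tun    # patch port to br-int plus one tunnel port per peer
  run ovs-ofctl dump-flows br-tun    # flows translating local VLAN IDs to tunnel IDs
  run ip -br link show type bridge   # the per-VM Linux bridges
}

inspect_ovs
```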

Another option for connecting those layer 2 domains, without the VXLAN tunnels and br-tun, is to use the data center network or some other outside switching mechanism. Basically, a physical interface is used for those layer 2 domains, as a trunk interface :). Instead of connecting this interface directly to br-int, another bridge is used: br-ex (the name may depend on your installation).

You may use different interfaces for different VLANs as well. This kind of leaking of local VLANs to the outside world is called provider networks: you use the outside network to reach your layer 2 domains on the other nodes. Commonly the outside network also holds the IP address that serves as the default gateway of the virtual machines, so that is where traffic is routed to the outside or between virtual machine networks. You may use VRFs and other techniques for tenant isolation. The OpenStack documentation clearly explains the logical path with this kind of setup: https://docs.openstack.org/neutron/zed/admin/deploy-ovs-provider.html
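A provider VLAN network like the one described above can be created along these lines with the openstack CLI. A sketch under assumptions: physnet1 and VLAN 101 come from my Packstack options, while the network name, the subnet range and the gateway address are made-up example values. The helper names are mine, and the commands are only printed unless DRY_RUN=0.

```shell
#!/usr/bin/env bash
# Print (default) or run the openstack commands. Set DRY_RUN=0 with admin
# credentials loaded to actually create the network.
run() {
  if [ "${DRY_RUN:-1}" = 1 ]; then echo "+ $*"; else "$@"; fi
}

create_provider_vlan() {
  local name="$1" vlan_id="$2" cidr="$3" gateway="$4"
  # The network maps straight onto a VLAN of the physical network.
  run openstack network create "$name" --share \
      --provider-network-type vlan \
      --provider-physical-network physnet1 \
      --provider-segment "$vlan_id"
  # The gateway is an address on the outside (data center) router.
  run openstack subnet create "${name}-subnet" --network "$name" \
      --subnet-range "$cidr" --gateway "$gateway"
}

# Example values only: 203.0.113.0/24 is a documentation range.
create_provider_vlan provider-101 101 203.0.113.0/24 203.0.113.1
```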

- Routing inside OpenStack, using routers: OpenStack routers are namespaces that are connected to tenant networks via patch-tun. As they are separate namespaces, they have their own routing tables. You can share a router between different networks under the same project, whereas sharing a router between different projects or customers is somewhat cumbersome. Nevertheless, the logical view is like this:

Generally a central node is used for routing between networks. Routers are connected to the br-int bridges via the br-tun bridges. External connectivity is achieved by attaching an interface to a network that is externally routable, generally named br-ex. But you may also connect a router to any network, for example to a provider network as mentioned above (an externally routed layer 2 domain), so you can mix and match.
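Creating such a router and wiring it up takes three openstack CLI calls. A sketch: the router, subnet and network names are placeholders, the helper names are mine, and the commands are only printed unless DRY_RUN=0.

```shell
#!/usr/bin/env bash
# Print (default) or run the openstack commands. Set DRY_RUN=0 with
# credentials loaded to actually create the router.
run() {
  if [ "${DRY_RUN:-1}" = 1 ]; then echo "+ $*"; else "$@"; fi
}

create_router() {
  local router="$1" tenant_subnet="$2" external_net="$3"
  run openstack router create "$router"
  # Internal leg: the router gets the gateway address of the tenant subnet.
  run openstack router add subnet "$router" "$tenant_subnet"
  # External leg: any externally routable network, including a provider network.
  run openstack router set --external-gateway "$external_net" "$router"
}

# Placeholder names.
create_router router1 tenant-subnet extnet
```

On the node hosting the router, ip netns list should then show the router's namespace.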

Packet walkthroughs and better explanations:

Scenario: Classic with Open vSwitch

Open vSwitch: Self-service networks

Connecting an instance to the physical network

For enabling DVR (OpenStack distributed routers): this is much more straightforward. We already installed all OpenStack components on all nodes in order to install the network scripts, so the L3 agent is already installed on all nodes.

https://docs.openstack.org/neutron/pike/admin/config-dvr-ha-snat.html

- Change neutron.conf on the controller node. If you are using the controller node as the network node, configure it accordingly.

- Enable neutron-l3-agent.service on the new nodes.

You should see the L3 agents of all nodes when running openstack network agent list on the controller.
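The DVR changes can be sketched as below, following the option names from the DVR guide linked above (router_distributed, enable_distributed_routing, agent_mode). Assumptions on my side: crudini is available for editing the ini files, the file paths are the Packstack defaults, and the function names are made up. The commands are only printed unless DRY_RUN=0.

```shell
#!/usr/bin/env bash
# Sketch of the DVR changes for an OVS deployment. Prints the commands by
# default; set DRY_RUN=0 and run as root to apply them.
run() {
  if [ "${DRY_RUN:-1}" = 1 ]; then echo "+ $*"; else "$@"; fi
}

OVS_AGENT_INI=/etc/neutron/plugins/ml2/openvswitch_agent.ini

# Controller: make newly created routers distributed by default.
enable_dvr_controller() {
  run crudini --set /etc/neutron/neutron.conf DEFAULT router_distributed True
  run systemctl restart neutron-server.service
}

# Every node running an L3 agent. role is "network" (also handles SNAT)
# or "compute".
enable_dvr_node() {
  local role="$1" mode="dvr"
  if [ "$role" = network ]; then mode="dvr_snat"; fi
  run crudini --set "$OVS_AGENT_INI" agent enable_distributed_routing True
  # DVR relies on L2 population (l2population must also be a mechanism driver).
  run crudini --set "$OVS_AGENT_INI" agent l2_population True
  run crudini --set /etc/neutron/l3_agent.ini DEFAULT agent_mode "$mode"
  run systemctl enable --now neutron-l3-agent.service
  run systemctl restart neutron-openvswitch-agent neutron-l3-agent.service
}

enable_dvr_controller
enable_dvr_node compute
run openstack network agent list   # every node should list an L3 agent
```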

Basic neutron configuration files and related services:

/etc/neutron/plugins/ml2/ml2_conf.ini --> systemctl restart neutron-server.service

/etc/neutron/plugins/ml2/openvswitch_agent.ini --> systemctl restart neutron-openvswitch-agent

/etc/neutron/l3_agent.ini --> systemctl restart neutron-l3-agent.service

Reading Links:

- https://docs.openstack.org/neutron/zed/admin/deploy-ovs-provider.html

L2 population and ARP responder: a very nice feature. As OpenStack knows about all VMs, it can install the necessary OpenFlow rules to reduce ARP traffic. But as the installation grows, the number of OpenFlow rules increases. Check for scaling issues as well when deploying these features.

- https://assafmuller.com/2014/02/23/ml2-address-population/

- https://assafmuller.com/category/ml2/
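Turning on L2 population and the ARP responder for the OVS agent, and keeping an eye on the rule count, could look like this. A sketch, assuming crudini is available and the Packstack default file paths; the function names are mine. The configuration commands are only printed unless DRY_RUN=0.

```shell
#!/usr/bin/env bash
# Print (default) or apply the configuration commands. Set DRY_RUN=0 and run
# as root to apply them.
run() {
  if [ "${DRY_RUN:-1}" = 1 ]; then echo "+ $*"; else "$@"; fi
}

ML2_INI=/etc/neutron/plugins/ml2/ml2_conf.ini
OVS_AGENT_INI=/etc/neutron/plugins/ml2/openvswitch_agent.ini

enable_l2pop() {
  # Controller side: load the l2population mechanism driver next to openvswitch.
  run crudini --set "$ML2_INI" ml2 mechanism_drivers openvswitch,l2population
  run systemctl restart neutron-server.service
  # Agent side (every node): pre-populate forwarding entries, answer ARP locally.
  run crudini --set "$OVS_AGENT_INI" agent l2_population True
  run crudini --set "$OVS_AGENT_INI" agent arp_responder True
  run systemctl restart neutron-openvswitch-agent
}

# Rough scaling indicator: number of OpenFlow rules currently on br-tun.
# Needs root on a node with Open vSwitch; not called here.
count_tun_flows() {
  ovs-ofctl dump-flows br-tun | grep -c 'table='
}

enable_l2pop
```

Comparing count_tun_flows before and after adding VMs gives a feel for how fast the rule count grows.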

For DVR:

Basically DVR is like EVPN asymmetric routing. Using OVS flow rules, traffic between different networks is switched locally by changing the source MAC of the traffic. Every router interface connected to the tenant networks uses a different MAC address. For example, tenants in the network 10.0.0.0/24 will use 10.0.0.1 as their default gateway, which is the router interface connected to the tenant network. On each compute node a router interface with 10.0.0.1 will be configured, but each one will have a different MAC address.

- https://assafmuller.com/category/dvr/

- https://networkop.co.uk/blog/2016/10/13/os-dvr/

- http://blog.gampel.net/2014/12/openstack-neutron-distributed-virtual.html

- https://docs.openstack.org/neutron/zed/admin/deploy-ovs-ha-dvr.html

Old notes, inconsistent setup!!!!

Got some errors during the restart of the network service; found unused interface configurations like ens3 and deleted them to solve the problem.

I just followed the Packstack guide, also taking https://networkop.co.uk/blog/2016/04/04/openstack-unl/ into account.

I have done a couple of different deployments using

- single host: packstack --allinone --os-neutron-l2-agent=openvswitch --os-neutron-ml2-mechanism-drivers=openvswitch --os-neutron-ml2-tenant-network-types=vxlan --os-neutron-ml2-type-drivers=vxlan,flat --provision-demo=n --os-neutron-ovs-bridge-mappings=extnet:br-ex --os-neutron-ovs-bridge-interfaces=br-ex:eth0

- multihost: packstack --allinone --provision-demo=n --os-cinder-install=n --os-ceilometer-install=n --os-trove-install=n --os-ironic-install=n --os-swift-install=n --os-aodh-install=n --os-neutron-l2-agent=openvswitch --os-neutron-ml2-mechanism-drivers=openvswitch --os-neutron-ml2-tenant-network-types=vxlan,vlan --os-neutron-ml2-type-drivers=vxlan,flat,vlan --os-neutron-ovs-bridge-mappings=extnet:br-ex --os-neutron-ovs-bridge-interfaces=br-ex:eth0 --os-neutron-ml2-vlan-ranges=extnet:101:103 --os-neutron-l3-ext-bridge=provider --os-compute-hosts=192.168.122.191,192.168.122.168

GNS3 notes:

- used CentOS-Stream-GenericCloud-8-20220913.0.x86_64.qcow2

- enabled KVM acceleration on GNS3.

- advanced options for VM: -cpu host -accel kvm

- used a disk of about 20 GB

- used name hdd type

- increased RAM to about 16 GB

- disabled SELinux

- for accessing the OpenStack dashboard from outside GNS3, just used NAT on the GNS3 host:

- sudo iptables -t nat -A PREROUTING -p tcp --dport 5622 -j DNAT --to-destination 192.168.122.22:80 && sudo iptables -I LIBVIRT_FWI -p tcp --dport 80 -m conntrack --ctstate NEW,ESTABLISHED -j ACCEPT

- Also add the GNS3 access interface to /etc/httpd/conf.d/15-horizon_vhost.conf under AllowedServers and restart httpd or reboot the machine.

- left /etc/hosts untouched

One of my topologies:

I used two Ethernet interfaces on each VM. One is used for the management network and the other one for OpenStack VM networking.

- CentOS Stream 8: root with an empty password on the console. After logging in, change the password to something.

- The eth0 interfaces are connected to the NAT network via the mgmt switch. The initial setup uses DHCP on those interfaces.

- Follow the basic steps of the Packstack installation on the controller node. Compute nodes do not require the OpenStack and Packstack installation.

- Change the DHCP configuration of the mgmt network on all servers to a static IP address configuration (use the DHCP-assigned addresses of the VMs).

- Configure eth1 for OpenStack VM networking.

- Install OpenStack with Packstack according to your setup, which may take several minutes.

- Accessing the OpenStack dashboard from outside the GNS3 network.

You need DNAT to your OpenStack Horizon dashboard. Let's say the controller management IP address is 192.168.122.96 and Horizon is running on the default port 80 on the controller node. On the GNS3 host:

sudo iptables -t nat -A PREROUTING -p tcp --dport 5602 -j DNAT --to-destination 192.168.122.96:80

sudo iptables -I LIBVIRT_FWI -p tcp --dport 80 -m conntrack --ctstate NEW,ESTABLISHED -j ACCEPT

On the controller: add the GNS3 access interface or IP address to the /etc/httpd/conf.d/15-horizon_vhost.conf configuration file as a ServerAlias.

- You may use a port number other than 80 on the controller. This will require more changes on the controller.

https://stackoverflow.com/questions/30525742/how-to-change-default-http-port-in-openstack-dashboard